Introduction

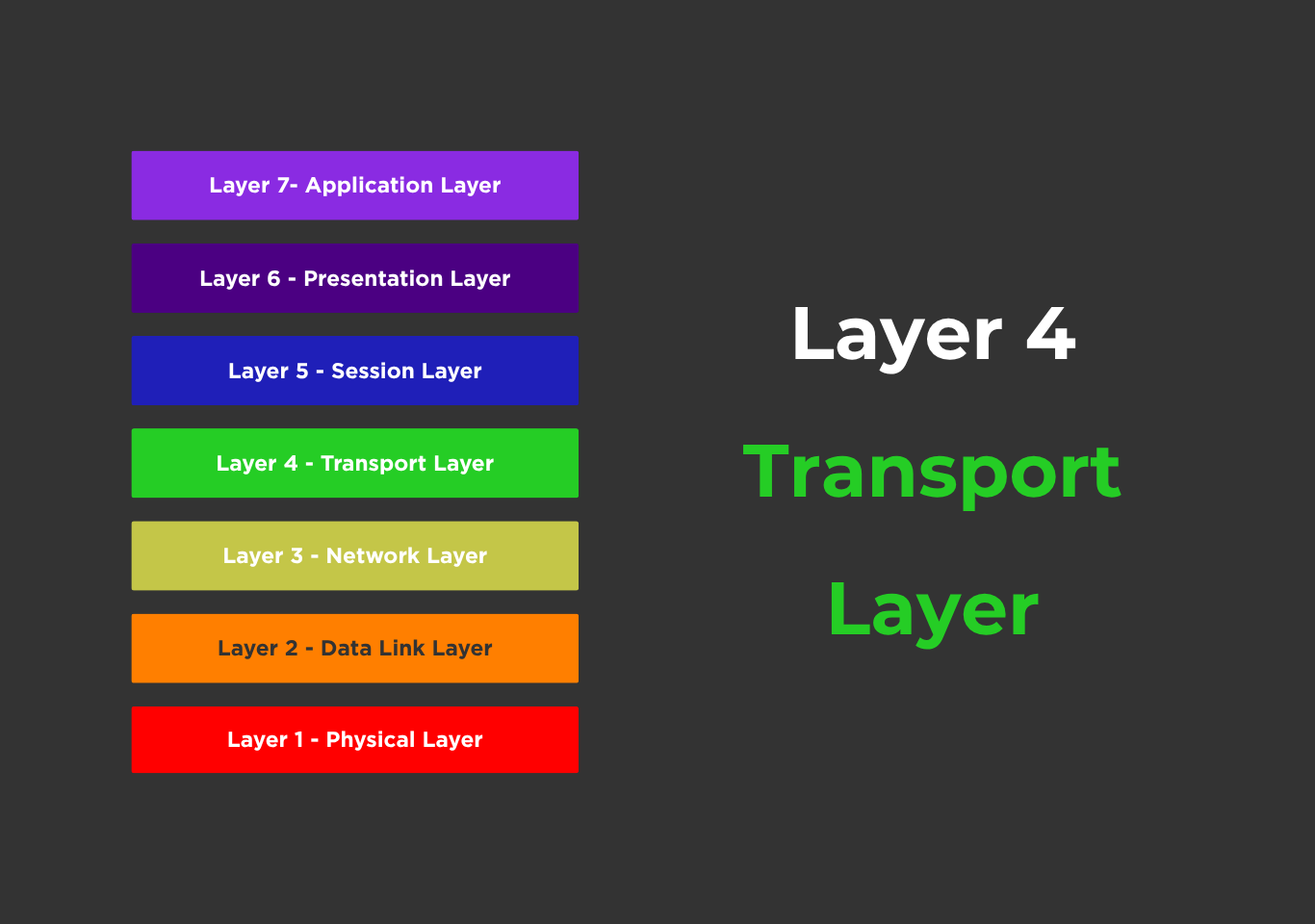

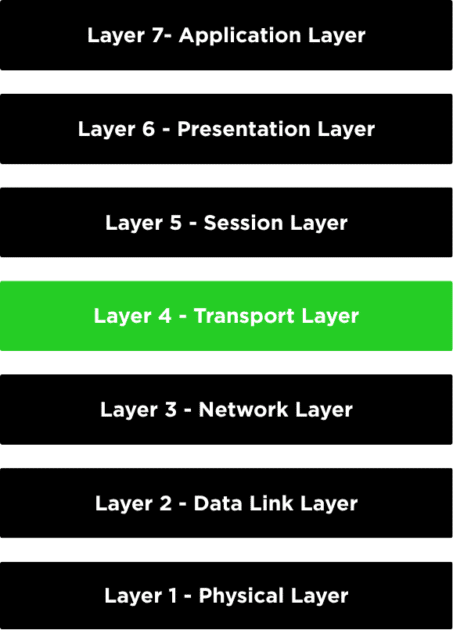

The transport layer is the fourth layer in the OSI (Open Systems Interconnection) model and is responsible for end-to-end delivery of data between applications running on two different devices.

The transport layer acts as an intermediary between the network layer, which handles the delivery of packets to the correct machine, and the session layer, which manages communication sessions. Its primary responsibility is to deliver data from the source application to the destination application efficiently and, when the application requires it, reliably and in the correct order. It sets up, maintains, and tears down connections, and it offers both reliable and unreliable data delivery.

For reliable connections, the transport layer performs both error detection and error correction: when it detects a lost or damaged segment, it retransmits the data. For unreliable connections, it provides only error detection, and any recovery is left to a higher layer. With a reliable protocol, the layer uses sequencing, acknowledgments, and flow control to ensure that the message arrives error-free, intact, and in order. Security between the two end nodes, such as encryption and authentication, is typically added by a protocol running on top of the transport layer, such as TLS.

While the Network layer handles addressing and routes data between hosts, the Transport layer handles process-to-process communication. It ensures that a message from an application on one host reaches the correct application on another. It abstracts away many of the complexities of network routing, packet forwarding, and physical link details so that applications do not need to worry about them. For example, clients and servers just send data via sockets or ports; the transport layer handles segmentation, reliability, ordering, flow control, and more.
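A minimal sketch of this abstraction runs a TCP client and server in one process over the loopback interface, using Python's standard `socket` module. The function name `loopback_exchange` is hypothetical. The application code only writes and reads bytes. The handshake, segmentation, acknowledgments, and retransmissions all happen inside the operating system's TCP implementation.

```python
import socket


def loopback_exchange(message: bytes) -> bytes:
    """Send `message` from a TCP client to a TCP server on 127.0.0.1 and return what the server read."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen(1)
        address = server.getsockname()

        # connect() completes the TCP three-way handshake inside the kernel.
        with socket.create_connection(address) as client:
            conn, _peer = server.accept()
            with conn:
                client.sendall(message)          # TCP decides how to split this into segments
                client.shutdown(socket.SHUT_WR)  # no more data from the client (sends a FIN)

                chunks = []
                while True:
                    data = conn.recv(4096)  # one recv() may return fewer bytes than one send() wrote
                    if not data:            # empty result: the client closed its sending side
                        break
                    chunks.append(data)
                return b"".join(chunks)


if __name__ == "__main__":
    print(loopback_exchange(b"hello, transport layer"))
```

Both ends run in a single thread, so this sketch assumes a small message that fits in the kernel's socket buffers. A real server would read concurrently, for example in a separate thread.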

In short:

- Provides both reliable and unreliable delivery of data.

- Segments data into smaller, more manageable units.

- Multiplexes connections, allowing multiple applications to send and receive data simultaneously.

- Implements these services through standard protocols such as TCP, UDP, and DCCP.

- In connectionless mode (UDP), treats each datagram independently, without reference to earlier or later datagrams.

- With a reliable protocol such as TCP, ensures that each message reaches its destination completely.

- All layer 4 protocols provide a multiplexing/demultiplexing service.

Core functions

Segmentation and Reassembly

The Transport Layer divides large data streams from the Application Layer into smaller, manageable segments before transmission. This procedure, known as segmentation, ensures that each segment still fits within the network’s Maximum Transmission Unit (MTU) once lower-layer headers are added, so it can be transmitted efficiently across different routes. Each segment carries a header that includes the source and destination ports. In TCP, the header also carries a sequence number, which allows accurate reassembly on the receiver’s side.

When the data reaches its destination, the Transport Layer reassembles these segments into the original message, ensuring data integrity and correct order. This process allows for smooth, reliable communication between devices even when data takes multiple paths through the network. It also enables error checking using checksums to detect corruption during transit.
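The segmentation and reassembly steps can be sketched as follows. This is an illustrative model, not how an operating system's TCP stack is written. The 1460-byte MSS (Maximum Segment Size) is an assumption, a typical value for Ethernet, and the helper names `segment` and `reassemble` are hypothetical.

```python
import random

# Assumed MSS: 1500-byte Ethernet MTU minus 20-byte IPv4 header and 20-byte TCP header (no options).
MSS = 1460


def segment(data: bytes, mss: int = MSS) -> list:
    """Split a byte stream into (sequence_number, payload) pairs.

    As in TCP, the sequence number is the byte offset of the payload in the stream.
    Real TCP starts from a random initial sequence number; this sketch starts at 0.
    """
    return [(offset, data[offset:offset + mss]) for offset in range(0, len(data), mss)]


def reassemble(segments: list) -> bytes:
    """Rebuild the stream from segments that may have arrived out of order."""
    stream = bytearray()
    for seq, payload in sorted(segments, key=lambda s: s[0]):
        if seq != len(stream):  # a missing or overlapping segment breaks the byte sequence
            raise ValueError(f"expected byte {len(stream)}, got segment starting at {seq}")
        stream += payload
    return bytes(stream)


if __name__ == "__main__":
    message = bytes(range(256)) * 20  # 5120 bytes of application data
    segments = segment(message)       # 4 segments: 1460 + 1460 + 1460 + 740 bytes
    random.shuffle(segments)          # pretend they took different routes
    assert reassemble(segments) == message
```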

Process-to-process communication

Unlike the Network Layer, which concentrates on host-to-host delivery via IP addresses, the Transport Layer handles process-to-process communication via port numbers. Each application or service running on a device is assigned a distinct port. The Transport Layer adds these port numbers to the header of each data segment, ensuring that the message is delivered to the appropriate application on the receiving host.

For example, web servers commonly utilize ports 80 (HTTP) or 443 (HTTPS), whereas email uses port 25 (SMTP). This method enables many applications to connect without interference. Multiplexing allows data from several processes to share a single network connection, while demultiplexing ensures that incoming segments are routed to the correct application.
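Demultiplexing can be pictured as a lookup table from port numbers to the processes that own them. The class below is a hypothetical toy, not an operating-system API. It keys only on the destination port, as UDP does. Real TCP demultiplexes connected sockets by the full four-tuple of source IP, source port, destination IP, and destination port.

```python
class PortDemultiplexer:
    """Toy transport-layer demultiplexer keyed on destination port (hypothetical class)."""

    def __init__(self):
        self.bound = {}  # destination port -> handler(src_port, payload)

    def bind(self, port, handler):
        # Only one process may own a port at a time (compare "Address already in use").
        if port in self.bound:
            raise OSError(f"port {port} is already in use")
        self.bound[port] = handler

    def deliver(self, src_port, dst_port, payload):
        """Hand an incoming segment to the process bound to dst_port; return False if none is."""
        handler = self.bound.get(dst_port)
        if handler is None:
            # A real stack would reply with a TCP RST, or an ICMP "port unreachable" for UDP.
            return False
        handler(src_port, payload)
        return True


if __name__ == "__main__":
    web_inbox, mail_inbox = [], []
    demux = PortDemultiplexer()
    demux.bind(80, lambda src, data: web_inbox.append((src, data)))
    demux.bind(25, lambda src, data: mail_inbox.append((src, data)))

    # Segments from several client processes arriving on one network interface:
    demux.deliver(51000, 80, b"GET / HTTP/1.1")
    demux.deliver(51001, 25, b"HELO example.org")
    demux.deliver(51002, 8080, b"nobody listening here")

    print(web_inbox)   # [(51000, b'GET / HTTP/1.1')]
    print(mail_inbox)  # [(51001, b'HELO example.org')]
```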

Reliable data transfer

One of the key characteristics of the Transport Layer is reliability. Protocols such as TCP ensure that each transmitted segment is delivered accurately and in order, using acknowledgments (ACKs), retransmissions, and checksums. When a segment arrives at its destination, the receiver sends an acknowledgment to confirm successful receipt.

If an acknowledgment is not received within a timeout period, the sender retransmits the missing segment. Sequence numbers in the header let the receiver put data back in the correct order, even if packets travel different paths. Checksums provide error detection: damaged segments are discarded, and because they are never acknowledged, the sender retransmits them. These mechanisms make TCP well suited to applications that require exact data, such as file transfers and email.
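The acknowledgment, timeout, and checksum logic can be illustrated with the alternating-bit (stop-and-wait) protocol. It is a simpler relative of TCP's mechanism. TCP numbers bytes with 32-bit sequence numbers and keeps many segments in flight, whereas this sketch sends one chunk at a time with a 1-bit sequence number. The checksum follows RFC 1071. The channel model, the loss and corruption rates, and the function names are hypothetical.

```python
import random


def internet_checksum(data: bytes) -> int:
    """16-bit ones' complement checksum as described in RFC 1071 (the algorithm IP, TCP and UDP use)."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]      # add the next 16-bit big-endian word
        total = (total & 0xFFFF) + (total >> 16)  # end-around carry
    return ~total & 0xFFFF


def stop_and_wait(chunks, loss_rate=0.3, corrupt_rate=0.2, seed=7, max_tries=100):
    """Deliver non-empty byte chunks over a simulated lossy channel; return (data, transmissions)."""
    rng = random.Random(seed)
    delivered = []
    expected = 0       # receiver: next expected 1-bit sequence number
    transmissions = 0
    for index, chunk in enumerate(chunks):
        seq = index % 2  # alternating-bit sequence number
        for _ in range(max_tries):
            transmissions += 1
            header = bytes([seq])
            checksum = internet_checksum(header + chunk)
            payload = chunk

            # Sender -> receiver: the segment may be lost or damaged in transit.
            if rng.random() < loss_rate:
                continue  # lost: no ACK arrives, the timer expires, and the sender retransmits
            if rng.random() < corrupt_rate:
                payload = bytes([payload[0] ^ 0xFF]) + payload[1:]  # flip the bits of one byte

            # Receiver: discard damaged segments without acknowledging them.
            if internet_checksum(header + payload) != checksum:
                continue  # sender times out and retransmits
            if seq == expected:  # new data is delivered; a duplicate is only re-acknowledged
                delivered.append(payload)
                expected ^= 1

            # Receiver -> sender: the ACK itself may be lost.
            if rng.random() < loss_rate:
                continue  # sender times out and resends; the receiver treats it as a duplicate
            break  # ACK received: move on to the next chunk
        else:
            raise RuntimeError("gave up after too many retransmissions")
    return b"".join(delivered), transmissions


if __name__ == "__main__":
    message = b"Reliable delivery over an unreliable channel."
    chunks = [message[i:i + 8] for i in range(0, len(message), 8)]
    data, sent = stop_and_wait(chunks)
    assert data == message
    print(f"{len(chunks)} chunks needed {sent} transmissions")
```

The 1-bit sequence number is enough for stop-and-wait: it lets the receiver recognize a retransmitted duplicate after a lost ACK and avoid delivering it twice.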

Flow control

Flow control limits the amount of data that can be sent before an acknowledgment is received, which keeps the sender from overwhelming the receiver. TCP uses a sliding window protocol in which the receiver advertises its window size, indicating how much more data its buffer can accept. The sender must keep the amount of unacknowledged data within this limit. If the receiver’s buffer fills up, the receiver shrinks the advertised window, down to zero if necessary, which slows or pauses the incoming data.

This approach keeps communication efficient while preventing data loss due to buffer overflow. TCP updates the advertised window with every acknowledgment, so the sender can use as much of the receiver’s capacity as is currently available. Avoiding congestion inside the network is a separate mechanism, covered under congestion control. Flow control is critical for matching the sender’s rate to the speed at which the receiving application consumes data. Without it, a fast sender could overflow a slow receiver’s buffer, causing dropped segments, retransmissions, and significant performance degradation.
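The effect of the advertised window can be seen in a toy simulation. It assumes one tick per round trip, an ideal lossless network, and hypothetical parameter names. It models only the receiver's advertised window, not TCP's congestion window or real timing.

```python
def simulate_flow_control(total, buffer_size, app_read_per_tick):
    """Toy model of receiver-advertised-window flow control.

    Each tick stands for one round trip: the sender transmits, the receiving
    application reads some bytes, and the ACK carries the new advertised window.
    Returns a list of (bytes_sent, advertised_window) per tick.
    """
    if app_read_per_tick <= 0:
        raise ValueError("the receiving application must read something, or the window stays at zero")
    sent = 0
    buffered = 0          # bytes waiting in the receiver's buffer
    consumed = 0          # bytes already read by the receiving application
    window = buffer_size  # receiver's advertised window, initially the whole buffer
    trace = []
    while consumed < total:
        # Sender: never send more than the receiver said it can hold.
        burst = min(window, total - sent)
        sent += burst
        buffered += burst
        if buffered > buffer_size:
            raise RuntimeError("receiver buffer overflow")  # cannot happen while the sender obeys the window

        # Receiver: the application drains part of the buffer.
        read = min(app_read_per_tick, buffered)
        buffered -= read
        consumed += read

        # ACK: advertise the free space left in the buffer.
        window = buffer_size - buffered
        trace.append((burst, window))
    return trace


if __name__ == "__main__":
    for burst, window in simulate_flow_control(total=10_000, buffer_size=4_000, app_read_per_tick=1_000):
        print(f"sent {burst:5d} bytes, receiver now advertises {window:5d}")
```

With a 4,000-byte buffer and an application that reads 1,000 bytes per tick, the sender sends 4,000 bytes once and is then held to 1,000 bytes per tick, the rate at which the receiver consumes data.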

Congestion Control

Congestion control prevents the network from becoming overloaded, which would otherwise cause packet loss and delays. The Transport Layer, specifically TCP, monitors network conditions and adjusts its transmission rate accordingly. Slow Start, Additive Increase/Multiplicative Decrease (AIMD), and Fast Recovery are techniques for managing congestion dynamically. TCP starts with a small congestion window, grows it quickly during Slow Start, and then increases it gradually until packet loss indicates congestion.

At that point, TCP sharply reduces its sending rate, typically halving the congestion window, to relieve the strain on the network. This adaptive behavior balances throughput and stability. Congestion control benefits both the sender and the network by smoothing data flow and avoiding bottlenecks in routers and other intermediate devices. It is crucial in large-scale systems such as cloud services, where many connections are active simultaneously. Effective congestion control also promotes fairness and stability among competing connections.
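Slow Start and AIMD can be traced with a simplified model that counts the window in whole segments per round trip. It assumes an initial window of 1 segment and a hypothetical slow-start threshold of 16. Losses are detected through duplicate ACKs at chosen round trips. Timeouts are not modeled; after a timeout, TCP Reno would reset the window to one segment and re-enter Slow Start.

```python
def cwnd_trace(rounds, loss_rounds, initial_ssthresh=16):
    """Congestion window (in segments) at the start of each round trip, simplified TCP Reno.

    loss_rounds: set of RTT indices in which a loss is detected via duplicate ACKs.
    """
    cwnd, ssthresh = 1, initial_ssthresh
    trace = []
    for rtt in range(rounds):
        trace.append(cwnd)
        if rtt in loss_rounds:
            # Multiplicative decrease: remember half the window and continue from there
            # (fast recovery), instead of dropping back to 1 as after a timeout.
            ssthresh = max(cwnd // 2, 2)
            cwnd = ssthresh
        elif cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)  # slow start: double every RTT, capped at ssthresh here
        else:
            cwnd += 1  # congestion avoidance: additive increase of one segment per RTT
    return trace


if __name__ == "__main__":
    print(cwnd_trace(rounds=12, loss_rounds={8}))
    # [1, 2, 4, 8, 16, 17, 18, 19, 20, 10, 11, 12]
```

The output shows exponential growth up to the threshold, then linear growth, then a halving at the loss. This is the sawtooth pattern that AIMD produces.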

Connection Establishment and Termination

The Transport Layer manages virtual connections between two hosts, including their establishment and termination. In TCP, a connection is established with a three-way handshake: SYN, SYN-ACK, and ACK messages synchronize the initial sequence numbers of both sides and signal readiness for data transfer. Once communication is complete, the connection is terminated in four steps using the FIN and ACK flags.

This guarantees that both sides acknowledge the closing of the session, avoiding data loss or abrupt termination. Connection management enables efficient communication and resource allocation during the session. It keeps sender and receiver synchronized, allowing programs to remain reliable and consistent. This structured approach provides controlled communication, making TCP well suited to data-sensitive applications such as banking or file transfers. In contrast, UDP is connectionless and sends datagrams without any setup or teardown.
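The sequence and acknowledgment numbers exchanged while opening and closing a connection can be traced with a small model. The names `Segment` and `connection_lifecycle` are hypothetical. The model shows only the numbers that appear in a textbook trace and is not a TCP implementation. It assumes the client sends one data segment and then closes first.

```python
import random
from typing import NamedTuple, Optional

MOD = 2 ** 32  # TCP sequence numbers are 32 bits and wrap around


class Segment(NamedTuple):
    sender: str
    flags: str
    seq: int
    ack: Optional[int]  # None when the ACK flag is not set
    length: int = 0     # payload bytes


def connection_lifecycle(data_len, client_isn=None, server_isn=None):
    """Segments exchanged to open a connection, send one data segment, and close it."""
    # Real stacks choose hard-to-guess initial sequence numbers; random ones stand in here.
    c = client_isn if client_isn is not None else random.randrange(MOD)
    s = server_isn if server_isn is not None else random.randrange(MOD)
    log = []

    # Three-way handshake. A SYN consumes one sequence number.
    log.append(Segment("client", "SYN", c, None))
    log.append(Segment("server", "SYN-ACK", s, (c + 1) % MOD))
    c, s = (c + 1) % MOD, (s + 1) % MOD
    log.append(Segment("client", "ACK", c, s))

    # Data transfer: the acknowledgment number is the next byte the server expects.
    log.append(Segment("client", "ACK", c, s, data_len))
    c = (c + data_len) % MOD
    log.append(Segment("server", "ACK", s, c))

    # Four-step termination (the FIN segments also carry the ACK flag). A FIN consumes one sequence number.
    log.append(Segment("client", "FIN", c, s))
    c = (c + 1) % MOD
    log.append(Segment("server", "ACK", s, c))
    log.append(Segment("server", "FIN", s, c))
    s = (s + 1) % MOD
    log.append(Segment("client", "ACK", c, s))
    return log


if __name__ == "__main__":
    for seg in connection_lifecycle(data_len=50, client_isn=100, server_isn=300):
        print(seg)
```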

Transport layer protocols

The two most widely used transport-layer protocols are TCP and UDP. Others include DCCP and SCTP.

Transmission Control Protocol

The Transmission Control Protocol (TCP) is a connection-oriented protocol that allows devices to communicate reliably across a network. It builds a virtual connection before delivering data to ensure that packets are delivered in order and without mistakes. TCP establishes a session between sender and receiver using a three-way handshake (SYN, SYN-ACK, ACK). During data transmission, TCP gives sequence numbers to each segment, allowing the recipient to properly reassemble data. Errors are detected using checksums, and lost or damaged packets are retransmitted. TCP also uses flow control via a sliding window technique to prevent data overflow at the receiver’s end and congestion control to avoid network congestion.

User Datagram Protocol

The User Datagram Protocol (UDP) is a connectionless, lightweight transport layer protocol that delivers data quickly without first establishing a connection. Unlike TCP, UDP makes no guarantees about packet delivery, order, or error correction. Each packet, known as a datagram, is treated independently and may take a different path to its destination. UDP’s simplicity reduces latency and overhead, making it well suited to real-time applications like online gaming, live streaming, VoIP, and video conferencing, where speed matters more than reliability. It performs basic error detection with a checksum but uses no acknowledgments or retransmissions. UDP is frequently used for multicasting and broadcasting, which let a single message reach many recipients efficiently.
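A minimal UDP sketch over the loopback interface shows the connectionless style: there is no handshake, and each `sendto()` call emits one independent datagram. The helper name `udp_exchange` and the one-second timeout are assumptions made for illustration.

```python
import socket


def udp_exchange(messages, timeout=1.0):
    """Send each message as its own datagram over loopback and return what the receiver got."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
            socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))  # OS picks a free port
        receiver.settimeout(timeout)     # UDP has no retransmission, so never wait forever
        destination = receiver.getsockname()

        # No connection setup: each sendto() immediately emits one datagram.
        for message in messages:
            sender.sendto(message, destination)

        received = []
        for _ in messages:
            data, _source = receiver.recvfrom(65535)  # one call returns exactly one datagram
            received.append(data)
        return received


if __name__ == "__main__":
    print(udp_exchange([b"one", b"two", b"three"]))
```

Unlike a TCP byte stream, each `recvfrom()` returns exactly one datagram, so message boundaries are preserved. On the loopback interface the datagrams practically always arrive in order. Across a real network some could be lost or reordered, and `recvfrom()` would then raise a timeout error.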

| Feature | TCP (Transmission Control Protocol) | UDP (User Datagram Protocol) |
|---|---|---|
| Connection Type | Connection-oriented; establishes a session using a three-way handshake before data transfer. | Connectionless; sends data without establishing a connection. |
| Reliability | Reliable; ensures error-free, ordered, and complete delivery using acknowledgments and retransmissions. | Unreliable; no guarantee of delivery, order, or error correction. |
| Flow Control | Uses flow control (sliding window) to prevent receiver overload. | No flow control mechanism. |
| Congestion Control | Implements congestion control algorithms such as Slow Start and AIMD. | No congestion control; the application decides how fast to send. |
| Speed | Slower due to the overhead of connection setup and reliability checks. | Faster; minimal overhead makes it well suited to real-time applications. |
| Header Size | Larger header (20–60 bytes) with multiple control fields. | Smaller header (8 bytes) with minimal fields. |
| Use Cases | Used for applications requiring reliability (e.g., HTTP, FTP, email). | Used for time-sensitive or real-time applications (e.g., DNS, VoIP, online gaming). |
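The header sizes in the table follow from the fixed header layouts. The sketch below packs both headers with Python's `struct` module. The helper names are hypothetical. Checksums are left at 0 instead of being computed over the IP pseudo-header. The TCP header has no options, so it is the 20-byte minimum.

```python
import struct

UDP_HEADER = struct.Struct("!HHHH")      # source port, destination port, length, checksum
TCP_HEADER = struct.Struct("!HHIIHHHH")  # ports, sequence, acknowledgment, offset+flags, window, checksum, urgent pointer

SYN, ACK = 0x02, 0x10  # TCP flag bits


def udp_header(src_port, dst_port, payload_len, checksum=0):
    # The length field counts header + payload. A checksum of 0 means "not computed" (allowed for UDP over IPv4).
    return UDP_HEADER.pack(src_port, dst_port, UDP_HEADER.size + payload_len, checksum)


def tcp_header(src_port, dst_port, seq, ack, flags, window, checksum=0):
    data_offset = 5  # header length in 32-bit words: 5 words = 20 bytes, i.e. no options
    offset_and_flags = (data_offset << 12) | flags  # data offset in the top 4 bits, flag bits in the low bits
    return TCP_HEADER.pack(src_port, dst_port, seq, ack, offset_and_flags, window, checksum, 0)


if __name__ == "__main__":
    print(UDP_HEADER.size, TCP_HEADER.size)  # 8 20
    print(udp_header(5353, 53, payload_len=32).hex())
    print(tcp_header(51000, 443, seq=100, ack=0, flags=SYN, window=65535).hex())
```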

Security in the Transport Layer

Transport-layer security focuses on protecting data as it flows between network applications. TCP and UDP handle data delivery, but they provide no built-in encryption or authentication. To fill this gap, Secure Sockets Layer (SSL) and its successor, Transport Layer Security (TLS), were created. SSL is now deprecated. TLS runs on top of TCP and provides confidentiality, integrity, and authentication for transmitted data. It protects data from interception with symmetric encryption such as AES. It uses public-key algorithms such as RSA for authentication and key exchange, and message authentication codes (MACs) to ensure data integrity.

Before data transfer begins, the TLS handshake uses digital certificates to authenticate the server (and, optionally, the client) and to establish shared session keys. This protects critical information such as passwords, financial details, and personal data against eavesdropping or tampering. TLS is commonly used for secure web browsing (HTTPS), email protocols (SMTPS, IMAPS), and some VPNs. UDP-based applications can instead use DTLS (Datagram TLS), which provides similar security while keeping latency low. In modern networks, TLS is critical for maintaining trust and privacy and serves as the foundation for secure Internet communication.
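Python's standard `ssl` module layers TLS on top of an ordinary TCP socket, which mirrors the description above. The following is a minimal client sketch. The host `example.com`, the helper names, and the choice of TLS 1.2 as the minimum version are assumptions made for illustration. Calling `negotiated_tls_version` requires network access.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    # Loads the system's trusted CA certificates and turns on certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL 3.0, TLS 1.0 and TLS 1.1
    return context


def negotiated_tls_version(host: str, port: int = 443) -> str:
    context = make_client_context()
    with socket.create_connection((host, port), timeout=5) as tcp_sock:       # TCP three-way handshake
        with context.wrap_socket(tcp_sock, server_hostname=host) as tls_sock:  # TLS handshake over TCP
            return tls_sock.version()  # a string such as "TLSv1.3"


if __name__ == "__main__":
    print(negotiated_tls_version("example.com"))
```

Passing `server_hostname` enables certificate hostname checking (and SNI). With this context, omitting it raises an error instead of silently skipping verification.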

Benefits and Limitations

Benefits

- Ensures reliable data delivery through error detection, correction, and retransmission mechanisms.

- Provides flow control so that a fast sender cannot overwhelm the receiver, keeping communication balanced.

- Supports segmentation and reassembly, allowing large data streams to be transmitted efficiently.

- Enables multiplexing, letting multiple applications share a single network connection.

- Establishes end-to-end communication between source and destination systems.

- Offers connection management, handling setup and termination of sessions automatically.

- Enhances data integrity and user experience by ensuring ordered and complete transmission.

Limitations

- High overhead due to acknowledgment, sequencing, and retransmission processes in TCP.

- Increased latency because of connection setup and flow control mechanisms.

- Consumes more system resources (CPU, memory) to maintain reliability and error handling.

- Complex implementation compared to simpler protocols like UDP.

- Performance may degrade under heavy network congestion despite control algorithms.

- Lacks built-in encryption or authentication, requiring additional security protocols.

- Some applications needing real-time speed (e.g., gaming, streaming) may find transport-layer reliability too slow or inefficient.